Textures add a great deal of realism to ray-traced images. Plain colors alone are not enough to reproduce real-world scenes, which is why texturing is almost a must for every ray tracer.

For these two assignments, I implemented texture mapping, Perlin noise textures, and bump mapping. CUDA helped me, as it does once in a while, with texture operations such as fetching and filtering, and with setting up the various sampling parameters. Figuring out which functions to use and how to use them was a bit painful, but now all is clear, and I will talk about them in the "Lessons learned" section. I also implemented improved Perlin noise to produce procedural textures.
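
To give an idea of what improved Perlin noise involves, here is a minimal host-side C++ sketch. It is not the code from my ray tracer: the names `PerlinTable`, `fade`, `mix`, `grad`, and `perlin_noise` are made up for illustration, and the permutation table is built with a seeded shuffle, which is an assumption rather than the classic fixed table.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <numeric>
#include <random>

// Permutation table, stored twice so that perm[i + 1] never needs wrapping.
// Assumption: a seeded shuffle stands in for the classic fixed table.
// In a CUDA build, this array is a natural candidate for __constant__ memory.
struct PerlinTable {
    std::array<int, 512> perm;
    explicit PerlinTable(unsigned seed) {
        std::array<int, 256> p;
        std::iota(p.begin(), p.end(), 0);
        std::mt19937 rng(seed);
        std::shuffle(p.begin(), p.end(), rng);
        for (int i = 0; i < 512; ++i) perm[i] = p[i & 255];
    }
};

// Quintic fade curve 6t^5 - 15t^4 + 10t^3 used by the improved noise.
inline float fade(float t) { return t * t * t * (t * (t * 6.0f - 15.0f) + 10.0f); }

// Linear interpolation between a and b.
inline float mix(float t, float a, float b) { return a + t * (b - a); }

// Dot product of (x, y, z) with a gradient direction selected by the hash.
inline float grad(int hash, float x, float y, float z) {
    int h = hash & 15;
    float u = h < 8 ? x : y;
    float v = h < 4 ? y : ((h == 12 || h == 14) ? x : z);
    return ((h & 1) == 0 ? u : -u) + ((h & 2) == 0 ? v : -v);
}

float perlin_noise(const PerlinTable& t, float x, float y, float z) {
    // Lattice cell containing the point, wrapped to 0..255.
    int X = static_cast<int>(std::floor(x)) & 255;
    int Y = static_cast<int>(std::floor(y)) & 255;
    int Z = static_cast<int>(std::floor(z)) & 255;
    // Position of the point inside the cell.
    x -= std::floor(x);
    y -= std::floor(y);
    z -= std::floor(z);
    float u = fade(x), v = fade(y), w = fade(z);
    // Hash the eight cell corners.
    const auto& p = t.perm;
    int A = p[X] + Y, AA = p[A] + Z, AB = p[A + 1] + Z;
    int B = p[X + 1] + Y, BA = p[B] + Z, BB = p[B + 1] + Z;
    // Blend the gradient contributions of the eight corners.
    return mix(w,
        mix(v, mix(u, grad(p[AA], x, y, z), grad(p[BA], x - 1, y, z)),
               mix(u, grad(p[AB], x, y - 1, z), grad(p[BB], x - 1, y - 1, z))),
        mix(v, mix(u, grad(p[AA + 1], x, y, z - 1), grad(p[BA + 1], x - 1, y, z - 1)),
               mix(u, grad(p[AB + 1], x, y - 1, z - 1), grad(p[BB + 1], x - 1, y - 1, z - 1))));
}
```

A call such as `perlin_noise(table, p.x * scale, p.y * scale, p.z * scale)` (with a hypothetical `scale` factor) returns a signed value that can then be remapped to a color. The noise is zero at integer lattice points, so the scale should be chosen so that sample points do not all land on the lattice.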

Bump mapping is relatively easy once your ray tracer has texturing support. However, there is one big problem when dealing with the tangent vectors and the surface normal: how do you transform them if you are using instancing? Transforming the normal is well known (use the inverse transpose of the transformation matrix M), but what about the tangent vectors? They should not be transformed the way normals are. Say you figured out that you should use M itself rather than the inverse transpose of M. That works only as long as the determinant of M is positive. If M has a negative determinant, for example because it contains a reflection, the handedness of the tangent frame flips and everything changes. Thanks to a friend of mine who gave me this information, I was able to solve the problem by taking the tangent vectors from object space to world space with M and then multiplying one of them by the sign of the determinant of M.
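
The fix described above can be sketched in a few lines of host-side C++. This is only an illustration under some assumptions: `Vec3`, `Mat3`, `TangentFrame`, and `tangents_to_world` are hypothetical names, M is reduced to its upper-left 3x3 part, and the choice of which tangent vector gets flipped (the second one here) is arbitrary.

```cpp
#include <array>

struct Vec3 { float x, y, z; };

// Row-major 3x3 matrix: the upper-left part of the instance transform M.
using Mat3 = std::array<std::array<float, 3>, 3>;

inline Vec3 mul(const Mat3& m, const Vec3& v) {
    return { m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z,
             m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z,
             m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z };
}

inline Vec3 cross(const Vec3& a, const Vec3& b) {
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

inline float determinant(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

struct TangentFrame { Vec3 t, b; };

// Take both tangent vectors from object space to world space with M itself
// (not the inverse transpose), then flip one of them when det(M) is negative
// so that the frame keeps its handedness.
inline TangentFrame tangents_to_world(const Mat3& m, const Vec3& t_obj, const Vec3& b_obj) {
    Vec3 t = mul(m, t_obj);
    Vec3 b = mul(m, b_obj);
    float s = determinant(m) < 0.0f ? -1.0f : 1.0f;
    b = { s * b.x, s * b.y, s * b.z };  // assumption: the second tangent is the one flipped
    return { t, b };
}
```

For a mirror transform such as diag(-1, 1, 1) with tangents (1, 0, 0) and (0, 1, 0), the cross product of the transformed pair points along +z again only after the flip. Without it, the cross product points along -z and the bumps appear inverted. The sketch leaves out normalization, which is still needed if M contains scaling.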

Let us see the outputs of this week.

|

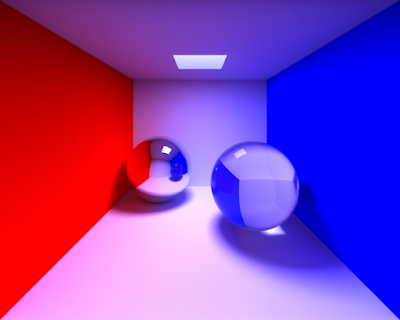

output of simple_texture.xml

kernel execution time: 9.7 milliseconds |

|

output of the skybox.xml

kernel execution time: 923 milliseconds |

|

output of ellipsoids_texture.xml

kernel execution time: 11.4 milliseconds |

|

output of sphere_texture_blend_bilinear.xml

kernel execution time: 10.7 milliseconds |

|

output of sphere_texture_replace_nearest.xml

kernel execution time: 10.8 milliseconds |

|

output of sphere_texture_replace_bilinear.xml

kernel execution time: 10.9 milliseconds |

|

output of killeroo_diffuse_specular_texture.xml

kernel execution time: 492 milliseconds |

|

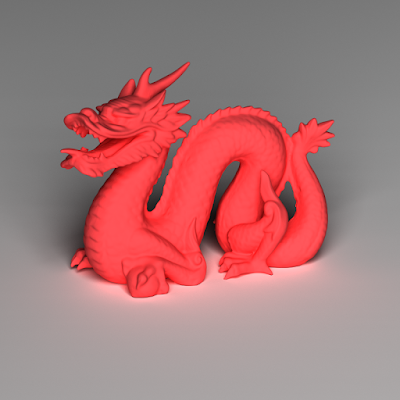

output of perlin_types.xml

kernel execution time: 14.1 milliseconds |

|

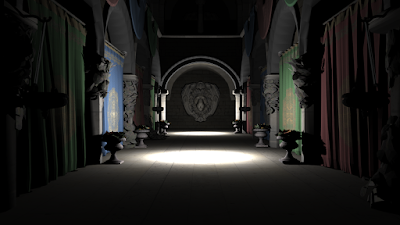

output of bump_mapping_basic.xml

kernel execution time: 350 milliseconds |

|

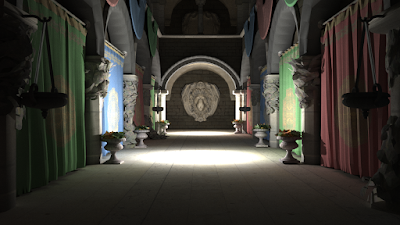

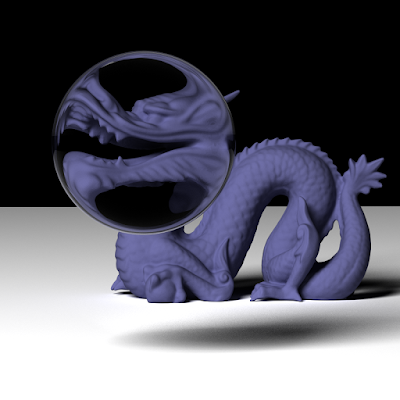

output of bump_mapping_transformed.xml

kernel execution time: 335 milliseconds |

|

output of killeroo_bump_walls.xml

kernel execution time: 515 milliseconds |

|

output of sphere_bump_nobump.xml

kernel execution time: 192 milliseconds |

Lessons learned:

- I stored the random number sequence for the Perlin noise in constant memory. Since the numbers do not change during execution, constant memory is a fast and appropriate choice.

- Texturing with CUDA is really simple. The only confusing part is knowing which function to use and how. First of all, I recommend implementing a texture manager class that manages all the textures loaded for your scene. Your scene file may use the same texture more than once, and without a manager you might allocate space for the same image many times. The following code snippet summarizes how to create a texture in CUDA.

```cpp
cudaArray* cuda_array = nullptr;

// 1 - Create and fill a channel description.
cudaChannelFormatDesc channel_desc =
    cudaCreateChannelDesc(8, 8, 8, 8, cudaChannelFormatKindUnsigned); // RGBA, 8 bits per channel
HANDLE_ERROR(cudaMallocArray(&cuda_array, &channel_desc, image_width, image_height));
// "bits" is the pointer to your image in host memory.
// Note: cudaMemcpyToArray is deprecated in recent CUDA toolkits;
// cudaMemcpy2DToArray is the replacement.
HANDLE_ERROR(cudaMemcpyToArray(cuda_array, 0, 0, bits,
                               image_width * image_height * 4, cudaMemcpyHostToDevice));

// 2 - Create and fill a resource description.
cudaResourceDesc res_desc;
memset(&res_desc, 0, sizeof(res_desc));
res_desc.resType = cudaResourceTypeArray;
res_desc.res.array.array = cuda_array;

// 3 - Create and fill a texture description.
cudaTextureDesc tex_desc;
memset(&tex_desc, 0, sizeof(tex_desc));
tex_desc.addressMode[0] = cudaAddressModeClamp;      // clamp u outside [0, 1]
tex_desc.addressMode[1] = cudaAddressModeClamp;      // clamp v outside [0, 1]
tex_desc.filterMode = cudaFilterModeLinear;          // bilinear filtering
tex_desc.readMode = cudaReadModeNormalizedFloat;     // 8-bit channels are returned as floats in [0, 1]
tex_desc.normalizedCoords = 1;                       // u and v are given in [0, 1]

// 4 - Create the texture object.
cudaTextureObject_t texture = 0;
HANDLE_ERROR(cudaCreateTextureObject(&texture, &res_desc, &tex_desc, nullptr));

// ..
// ..

// Fetch a pixel (in device code).
float4 pixel = tex2D<float4>(texture, u, v);
```

Beware that we do not bind any texture before using it, since we are using texture objects rather than texture references. To understand the difference and what each parameter does, see the CUDA documentation.
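
The texture manager recommended above can be as small as a cache keyed by file path. The following host-side C++ sketch is only one possible shape for it, under a few assumptions: `TextureManager`, `TextureHandle`, and the `Loader` callback are hypothetical names, and the loader is expected to wrap the creation steps from the snippet above and return the resulting texture object.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>

// Stand-in for cudaTextureObject_t, which is an unsigned 64-bit handle.
using TextureHandle = std::uint64_t;

class TextureManager {
public:
    // Hypothetical callback that loads the image at the given path and
    // creates the texture object for it.
    using Loader = std::function<TextureHandle(const std::string&)>;

    explicit TextureManager(Loader loader) : loader_(std::move(loader)) {}

    // Return the texture for a path, creating it only on first use.
    TextureHandle get(const std::string& path) {
        auto it = cache_.find(path);
        if (it != cache_.end()) return it->second;  // already loaded: reuse
        TextureHandle handle = loader_(path);
        cache_.emplace(path, handle);
        return handle;
    }

    // Number of distinct textures created so far.
    std::size_t size() const { return cache_.size(); }

private:
    Loader loader_;
    std::unordered_map<std::string, TextureHandle> cache_;
};
```

With this in place, the scene parser calls `get(path)` for every material that references an image, and two materials pointing at the same file share one texture object. A real manager would also release its resources on shutdown, for example with `cudaDestroyTextureObject` and `cudaFreeArray`.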